Chapter 10 part 2 - The binary formats

The three binary formats supported by pg_dump are the custom, the directory and the tar format. The first two can be accessed randomly by the restore program and have the parallel restore support, being the best choice for having a flexible and reliable restore. Before the the 9.3 the only format supporting the parallel restore was the custom. With this version the directory format accepts the -j switch. This feature, combined with the parallel dump seen in 9.3 is a massive improvement for saving big amount of data. The tar format does have the limit of 12 GB in the archive’s file size and doesn’t offer the parallel restore nor the selective restore.

The custom format is a binary archive with a table of contents pointing the various archive sections. The directory format is a directory which name is the value provided with the -f switch. The directory contents are a toc.dat file, where the table of contents and the schema are stored. For each table there is a gzip file which name is a number corresponding to the toc entry for the saved relation. Those files store the data restore for the relation.

The restore from the binary formats requires the pg_restore usage. Because almost all the pg_dump’s switches are supported by pg_restore we’ll not repeat the look out. Take a look to 9.1 for the switch meanings. Anyway this is the pg_restore’s help output.

pg_restore restores a PostgreSQL database from an archive created by pg_dump.

Usage:

pg_restore [OPTION]... [FILE]

General options:

-d, --dbname=NAME connect to database name

-f, --file=FILENAME output file name

-F, --format=c|d|t backup file format (should be automatic)

-l, --list print summarized TOC of the archive

-v, --verbose verbose mode

-V, --version output version information, then exit

-?, --help show this help, then exit

Options controlling the restore:

-a, --data-only restore only the data, no schema

-c, --clean clean (drop) database objects before recreating

-C, --create create the target database

-e, --exit-on-error exit on error, default is to continue

-I, --index=NAME restore named index

-j, --jobs=NUM use this many parallel jobs to restore

-L, --use-list=FILENAME use table of contents from this file for

selecting/ordering output

-n, --schema=NAME restore only objects in this schema

-O, --no-owner skip restoration of object ownership

-P, --function=NAME(args) restore named function

-s, --schema-only restore only the schema, no data

-S, --superuser=NAME superuser user name to use for disabling triggers

-t, --table=NAME restore named table(s)

-T, --trigger=NAME restore named trigger

-x, --no-privileges skip restoration of access privileges (grant/revoke)

-1, --single-transaction restore as a single transaction

--disable-triggers disable triggers during data-only restore

--no-data-for-failed-tables do not restore data of tables that could not be

created

--no-security-labels do not restore security labels

--no-tablespaces do not restore tablespace assignments

--section=SECTION restore named section (pre-data, data, or post-data)

--use-set-session-authorization

use SET SESSION AUTHORIZATION commands instead of

ALTER OWNER commands to set ownership

Connection options:

-h, --host=HOSTNAME database server host or socket directory

-p, --port=PORT database server port number

-U, --username=NAME connect as specified database user

-w, --no-password never prompt for password

-W, --password force password prompt (should happen automatically)

--role=ROLENAME do SET ROLE before restore

If no input file name is supplied, then standard input is used.

Report bugs to <pgsql-bugs@postgresql.org>.

If the database connection is omitted pg_restore sends the output to

the standard output. The switch -f sends the output to a filename

though. This is very useful if we want to check the original dump file

is readable, executing a restore onto the /dev/null device.

The the custom and directory formats show their power when restoring on

a database connection in a multi core system. Using the -j switch it’s

possible to specify the number of parallel jobs for the data and the

post data section. This can improve massively the recovery time, running

the most time consuming actions in multiple processes.

The word parallel can be confusing in some way. PostgreSQL does not supports multithreading. That means each backend process will use just only one cpu. In this context, each job take care of a different area of the restore’s table of contents, The TOC is split in many queues with a fixed object list to process. For example one queue will contain the data restoration for a table, and the relation’s indices and constraints.

The switch -section offers a fine grain control on which section of the archived data will be restored. In a custom and directory format there are three distinct sections.

- pre-data This section restores only the schema definitions not affecting the speed and reliability of the data restore. e.g. table’s DDL, functions creation, extensions, etc.

- data The data restore itself, by default saved as COPY statements to speed up the process

- post-data This section runs the restore for all the objects enforcing the data integrity, like the primary and foreign keys, triggers and the indices which presence during the restore slow down the data reload massively.

The switch -C creates the target database before starting the restoration. To do this the connection must happen first on another database.

We’ll now will see how to restore the database seen in 10.1 in the same two steps approach, using the custom format.

Let’s start with a complete database dump using the custom format.

postgres@tardis:~/dump$ pg_dump -Fc -f db_addr.dmp db_addr

pg_dump: reading schemas

pg_dump: reading user-defined tables

pg_dump: reading extensions

pg_dump: reading user-defined functions

pg_dump: reading user-defined types

pg_dump: reading procedural languages

pg_dump: reading user-defined aggregate functions

pg_dump: reading user-defined operators

pg_dump: reading user-defined operator classes

pg_dump: reading user-defined operator families

pg_dump: reading user-defined text search parsers

pg_dump: reading user-defined text search templates

pg_dump: reading user-defined text search dictionaries

pg_dump: reading user-defined text search configurations

pg_dump: reading user-defined foreign-data wrappers

pg_dump: reading user-defined foreign servers

pg_dump: reading default privileges

pg_dump: reading user-defined collations

pg_dump: reading user-defined conversions

pg_dump: reading type casts

pg_dump: reading table inheritance information

pg_dump: reading event triggers

pg_dump: finding extension members

pg_dump: finding inheritance relationships

pg_dump: reading column info for interesting tables

pg_dump: finding the columns and types of table "t_address"

pg_dump: finding default expressions of table "t_address"

pg_dump: finding the columns and types of table "t_city"

pg_dump: finding default expressions of table "t_city"

pg_dump: flagging inherited columns in subtables

pg_dump: reading indexes

pg_dump: reading indexes for table "t_address"

pg_dump: reading indexes for table "t_city"

pg_dump: reading constraints

pg_dump: reading foreign key constraints for table "t_address"

pg_dump: reading foreign key constraints for table "t_city"

pg_dump: reading triggers

pg_dump: reading triggers for table "t_address"

pg_dump: reading triggers for table "t_city"

pg_dump: reading rewrite rules

pg_dump: reading large objects

pg_dump: reading dependency data

pg_dump: saving encoding = UTF8

pg_dump: saving standard_conforming_strings = on

pg_dump: saving database definition

pg_dump: dumping contents of table t_address

pg_dump: dumping contents of table t_city

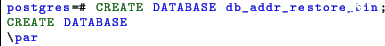

We’ll use a second database for the restore.

We’ll then restore just the schema using the following command.

postgres@tardis:~/dump$ pg_restore -v -s -d db_addr_restore_bin db_addr.dmp

pg_restore: connecting to database for restore

pg_restore: creating SCHEMA public

pg_restore: creating COMMENT SCHEMA public

pg_restore: creating EXTENSION plpgsql

pg_restore: creating COMMENT EXTENSION plpgsql

pg_restore: creating TABLE t_address

pg_restore: creating SEQUENCE t_address_i_id_addr_seq

pg_restore: creating SEQUENCE OWNED BY t_address_i_id_addr_seq

pg_restore: creating TABLE t_city

pg_restore: creating SEQUENCE t_city_i_id_city_seq

pg_restore: creating SEQUENCE OWNED BY t_city_i_id_city_seq

pg_restore: creating DEFAULT i_id_addr

pg_restore: creating DEFAULT i_id_city

pg_restore: creating CONSTRAINT pk_i_id_city

pg_restore: creating CONSTRAINT pk_id_address

pg_restore: creating FK CONSTRAINT fk_t_city_i_id_city

pg_restore: setting owner and privileges for DATABASE db_addr

pg_restore: setting owner and privileges for SCHEMA public

pg_restore: setting owner and privileges for COMMENT SCHEMA public

pg_restore: setting owner and privileges for ACL public

pg_restore: setting owner and privileges for EXTENSION plpgsql

pg_restore: setting owner and privileges for COMMENT EXTENSION plpgsql

pg_restore: setting owner and privileges for TABLE t_address

pg_restore: setting owner and privileges for SEQUENCE t_address_i_id_addr_seq

pg_restore: setting owner and privileges for SEQUENCE OWNED BY t_address_i_id_addr_seq

pg_restore: setting owner and privileges for TABLE t_city

pg_restore: setting owner and privileges for SEQUENCE t_city_i_id_city_seq

pg_restore: setting owner and privileges for SEQUENCE OWNED BY t_city_i_id_city_seq

pg_restore: setting owner and privileges for DEFAULT i_id_addr

pg_restore: setting owner and privileges for DEFAULT i_id_city

pg_restore: setting owner and privileges for CONSTRAINT pk_i_id_city

pg_restore: setting owner and privileges for CONSTRAINT pk_id_address

pg_restore: setting owner and privileges for FK CONSTRAINT fk_t_city_i_id_city

The dump file is specified as last parameter. The -d switch tells pg_restore which database to connect for the restore. By default the postgres user usually connects using the ident operating system daemon or the trust authentication method, when connected as local. That’s the reason why in this example there’s no need of specifying the username or enter the password.

The second restore’s step is the data load. In the example seen in 10.1 we used the pg_dump with -disable-triggers switch in order to avoid failures caused by constraint violation. With the custom format the switch is used at restore time.

postgres@tardis:~/dump$ pg_restore --disable-triggers -v -a -d db_addr_restore_bin db_addr.dmp

pg_restore: connecting to database for restore

pg_restore: disabling triggers for t_address

pg_restore: processing data for table "t_address"

pg_restore: enabling triggers for t_address

pg_restore: executing SEQUENCE SET t_address_i_id_addr_seq

pg_restore: disabling triggers for t_city

pg_restore: processing data for table "t_city"

pg_restore: enabling triggers for t_city

pg_restore: executing SEQUENCE SET t_city_i_id_city_seq

pg_restore: setting owner and privileges for TABLE DATA t_address

pg_restore: setting owner and privileges for SEQUENCE SET t_address_i_id_addr_seq

pg_restore: setting owner and privileges for TABLE DATA t_city

pg_restore: setting owner and privileges for SEQUENCE SET t_city_i_id_city_seq

However, this approach does not prevent the slowness caused by the indices when reloading the data. If a restore with multiple steps is required (e.g. creating the database schema and check all the relations are in place before starting) the section switch is a better choice. Let’s see how it works with the example seen before.

We’ll first restore the pre-data section10.1.

postgres@tardis:~/dump$ pg_restore --section=pre-data -v -d db_addr_restore_bin db_addr.dmp

pg_restore: connecting to database for restore

pg_restore: creating SCHEMA public

pg_restore: creating COMMENT SCHEMA public

pg_restore: creating EXTENSION plpgsql

pg_restore: creating COMMENT EXTENSION plpgsql

pg_restore: creating TABLE t_address

pg_restore: creating SEQUENCE t_address_i_id_addr_seq

pg_restore: creating SEQUENCE OWNED BY t_address_i_id_addr_seq

pg_restore: creating TABLE t_city

pg_restore: creating SEQUENCE t_city_i_id_city_seq

pg_restore: creating SEQUENCE OWNED BY t_city_i_id_city_seq

pg_restore: creating DEFAULT i_id_addr

pg_restore: creating DEFAULT i_id_city

pg_restore: setting owner and privileges for DATABASE db_addr

pg_restore: setting owner and privileges for SCHEMA public

pg_restore: setting owner and privileges for COMMENT SCHEMA public

pg_restore: setting owner and privileges for ACL public

pg_restore: setting owner and privileges for EXTENSION plpgsql

pg_restore: setting owner and privileges for COMMENT EXTENSION plpgsql

pg_restore: setting owner and privileges for TABLE t_address

pg_restore: setting owner and privileges for SEQUENCE t_address_i_id_addr_seq

pg_restore: setting owner and privileges for SEQUENCE OWNED BY t_address_i_id_addr_seq

pg_restore: setting owner and privileges for TABLE t_city

pg_restore: setting owner and privileges for SEQUENCE t_city_i_id_city_seq

pg_restore: setting owner and privileges for SEQUENCE OWNED BY t_city_i_id_city_seq

pg_restore: setting owner and privileges for DEFAULT i_id_addr

pg_restore: setting owner and privileges for DEFAULT i_id_city

Again the pg_restore loads the objects with the ownership and privileges. What’s missing is the constraints creation. The second step is the data section’s load.

postgres@tardis:~/dump$ pg_restore --section=data -v -d db_addr_restore_bin db_addr.dmp

pg_restore: connecting to database for restore

pg_restore: implied data-only restore

pg_restore: processing data for table "t_address"

pg_restore: executing SEQUENCE SET t_address_i_id_addr_seq

pg_restore: processing data for table "t_city"

pg_restore: executing SEQUENCE SET t_city_i_id_city_seq

pg_restore: setting owner and privileges for TABLE DATA t_address

pg_restore: setting owner and privileges for SEQUENCE SET t_address_i_id_addr_seq

pg_restore: setting owner and privileges for TABLE DATA t_city

pg_restore: setting owner and privileges for SEQUENCE SET t_city_i_id_city_seq

This section simply loads the table’s data and sets the sequence values. Apart for the ownership no further action is performed. Finally we’ll run the post-data section.

postgres@tardis:~/dump$ pg_restore --section=post-data -v -d db_addr_restore_bin db_addr.dmp

pg_restore: connecting to database for restore

pg_restore: creating CONSTRAINT pk_i_id_city

pg_restore: creating CONSTRAINT pk_id_address

pg_restore: creating FK CONSTRAINT fk_t_city_i_id_city

pg_restore: setting owner and privileges for CONSTRAINT pk_i_id_city

pg_restore: setting owner and privileges for CONSTRAINT pk_id_address

pg_restore: setting owner and privileges for FK CONSTRAINT fk_t_city_i_id_city

With this run the constrains (and eventually all the indices) are created in the best approach possible when dealing with the bulk data processing.

Loading the data contents without indices maximise the speed. The constraint and index build with the data already in place results in a faster build and a fresh index without any bloat.